Sidebar

<latex>{\fontsize{16pt}\selectfont \textbf{Localization & Motion Detection using a

2D Laser Rangefinder}} </latex>

<latex>{\fontsize{12pt}\selectfont \textbf{Alessandro Simovic}} </latex>

<latex>{\fontsize{10pt}\selectfont \textit{Semester Project ME}} </latex>

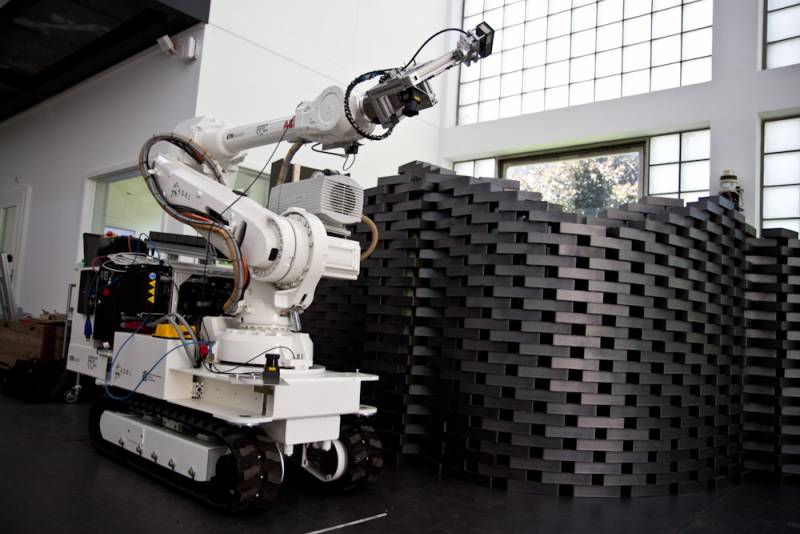

The In-Situ Fabricator

The In-Situ Fabricator

<latex> {\fontsize{12pt}\selectfont \textbf{Abstract} </latex>

In this thesis we show how a 2D laser rangefinder can be used as a tool for robot localization. The Kernelized Rényi Distance provides a method for calculating the entropy of subsequent laser scans. Minimizing the entropy yields an estimate of the current robot pose. An Extended Kalman Filter is deployed as an estimator for the robot trajectory, which fuses observations from the entropy minimization together with odometry mea- surements given by the hydraulic system. A qualitative analysis shows the localization improvements owing to the entropy based laser scan registration.

<latex> {\fontsize{12pt}\selectfont \textbf{System overview} </latex>

The In-Situ Fabricator is a building robot for construction sites. The tracks, driven by a hydraulic system, allow the robot to move around in its environment. The platform consists of a base with the integrated drive system for the tracks and an ABB manipulator arm mounted on top of the base. In addition to the sensors already integrated into the hydraulic system the robot was also equipped with a Hokuyo UTM-30LX-EW laser rangefinder, specifically installed for the purpose of this project.

<latex> {\fontsize{12pt}\selectfont \textbf{Goal} </latex>

With the mid-term goal of having the robot support workers on a real construction site there are a couple

of mandatory features for the robot, among which there is localization and workspace

safety. Localization is a general requirement for any autonomous robot and in this case

it will also facilitate the implementation of safeguards for protecting persons and other

robots in the shared workspace by performing motion detection. Prior to this project the robot estimated its

position using odometry data only. We improved the localization by adding observations from the entropy based laser scan registration

and combining them with the odometry data using an Extended Kalman Filter (EKF).

<latex> {\fontsize{12pt}\selectfont \textbf{Entropy based scan registration

} </latex>

The Hokuyo rangefinder used for this project delivers 2D laser scans with a rate of 40Hz. The idea behind

entropy based scan registration is to use a sequence of these scans, match them and identify the relative robot movement

between the acquisition of the scans. The underlying problem can be formulated as

the attempt to estimate the current robot pose $P_k$ given the previous robot pose $P_{k-1}$

in such a way that the current laser scan, transformed according to its corresponding

pose $P_{k}$ , matches the preceding laser scan acquired at $P_{k-1}$ as well as possible. The

exactness of the matching can be quantified with an entropy method building upon the

Kernelized Rényi Distance 1).

Minimizing the entropy of two matched laser scans thus yields optimal alignment between these two scans,

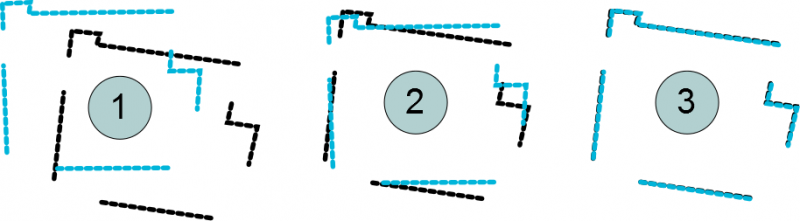

yielding an estimate of the new position relative to the previous one. The iterative process of aligning two scans is visualized below, where the alignment improves from step 1 to 3 and the two scans are marked by blue and black dots respectively:

Mathematically the Kernelized Rényi Distance can be formulated as follows

\begin{equation*} E(\mathbf{L}, \mathbf{\Omega}) = - \sum_j^M \sum_i^N \exp \left( \frac{-d_{ij}^T d_{ij}}{4 \sigma^2} \right) \label{eq:entropy} \tag{1} \end{equation*}

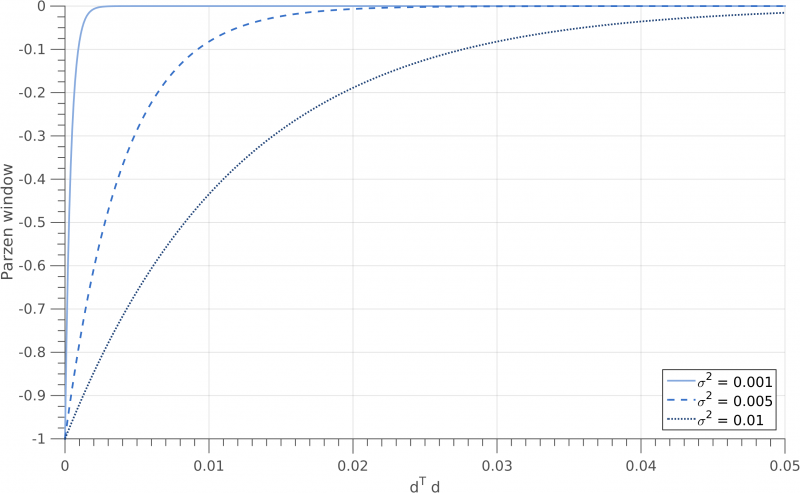

where $d_{ij} = L_j - \Omega_i$ is the distance between two points $j$ and $i$ in the reference and source scans $\mathbf{L}$ and $\mathbf{\Omega}$. $M$ and $N$ correspond to the respective number of points in each of the scans. The only parameter of the entropy computation is the Parzen window width $\sigma^2$ which defines the steepness of the exponential kernel function as shown in the figure below for three different values of $\sigma^2$ . In this sense the window width affects how a point $j$’s neighbours influence the cost function based on their squared distances $d^T_{ij} d_{ij}$ to that point. Large values for $\sigma^2$ cause points further away to have more weight due to the kernel function’s more gradual slope. At the same time the exponential kernel can flatten out if $\sigma^2$ is chosen too large and all the point’s neighbours $i$ might have almost the same weight regardless of their actual distances $d_{ij}$ . In contrast a minuscule value for $\sigma^2$ causes a rapid convergence of the exponential function, as visualized by the solid curve in the figure below, in which case only a point’s immediate neighbours add to the cost function.

With the entropy formulation given in eq. 1, the problem of finding the current pose given the previous one can be written as

\begin{equation*} \hat{P}_k = \arg \min_{P_k} E( \mathbf{L}, \mathbf{\Omega}} \tag{2} \end{equation*}

For a thorough but easy to follow derivation of eqs. 1 and 2 see the work of Sheehan et al. 2).

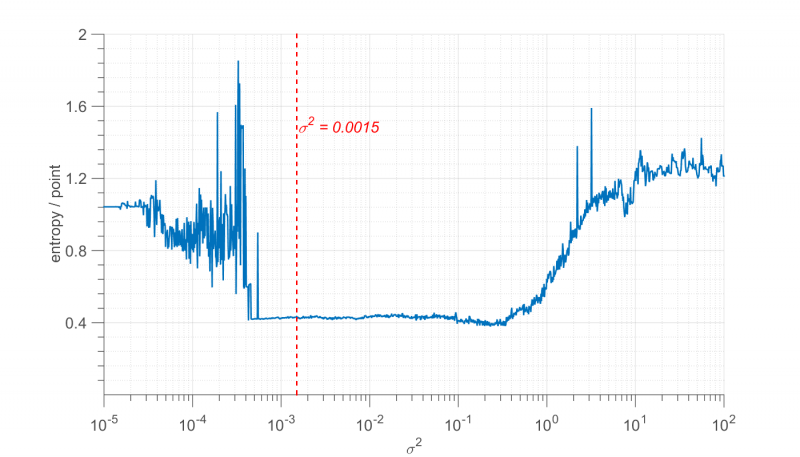

<latex> {\fontsize{12pt}\selectfont \textbf{Parameter search for optimal Parzen Window width $\sigma^2$} </latex>

Part of this project was to find proper values for $\sigma^2$ in eq. 1. On the implementation side we used K-Nearest-Neighbour Search in order to identify neighbours $i$ of a point which a significant weight, meaning points that lie inside the Parzen Window's range $[-1, -0.01]$. Then only these relevant points are considered when computing the sum in eq. 1, which allows to drastically reduce the computation. Therefore we are looking for values of $\sigma^2$ which are as low as possible while still providing a good accuracy of the entropy minimization.

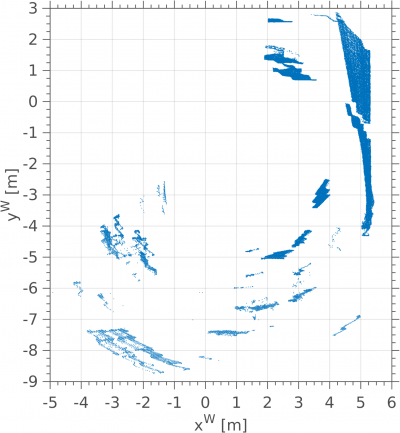

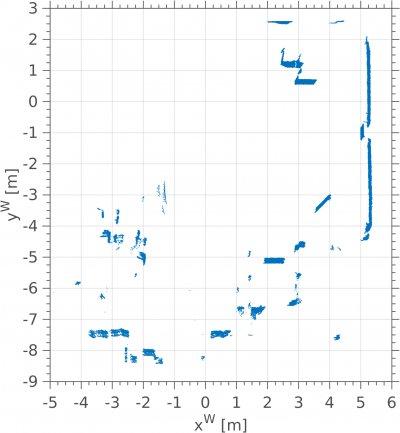

Entropy can be seen as a measure for disorder. Since we want to align consecutive laser scans as good as possible, we can optimize the alignment by first transforming all laser scans from a specific time period into a world-fixed frame, merge them and then compute the entropy of the resulting pointcloud 3). Such merged pointclouds are shown below for two different values of $\sigma^2$:

Clearly the figure on the right side shows a crispier image, meaning that the alignment worked better compared to the graphic on the left. Plotting the total entropy versus different values of $\sigma^2$ as below, we can identify “good” values.

For small values of $\sigma^2$ the figure above shows erratic behaviour of the entropy registration. The problem is the Parzen Window width $\sigma^2$ being too small, in which case not even a points closest neighbours have an effect on the entropy calculation. The entropy registration then performs rather poorly.

The next range $[0.0006, 0.2]$ results in the most accurate scan matching. We picked $\sigma^2 = 0.0015$ such that there is a small distance to $0.0006$, which improves the robustness of the registration algorithm. On a sidenote, the entropy registration becomes less accurate for larger Parzen Window widths $\sigma^2$ because then the exponential kernel function flattens out, causing all points to have almost identical weight. In that case the whole entropy registration aligns the pointclouds' centers of gravity.

<latex> {\fontsize{12pt}\selectfont \textbf{Localization performance} </latex>

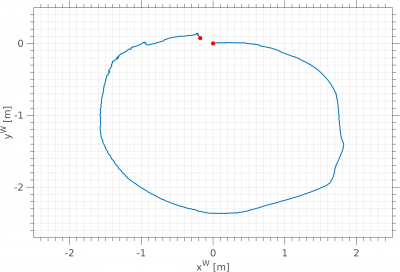

At the time of this project we had no possibility to record or create ground truth data, to which we could compare the estimated positions. In an attempt to at least make a qualitative statement about the performance of the Extended Kalman Filter and its estimate, we piloted the robot and tried to drive on a circular trajectory with identical start and end positions. The trajectory to the right is the result of the full localization algorithm, i.e. the scans were matched using entropy registration and the resulting observations were fused with the odometry data using the Extended Kalman Filter. The estimated trajectory is very closed to a closed circle and it is hard to tell if the pilot can be accounted for the the gap between start and end point or if the error is caused by the Filter. Nevertheless the results are a lot better compared to a scenario where only odometry data is being used for the localization.

<latex> {\fontsize{12pt}\selectfont \textbf{Motion Detection} </latex>

Due to limited time for this project and the extensive programming work required to make the entropy based scan registration work quick and efficient, motion detection quickly became a secondary goal in this project and as such the following proposed method for motion detection is not optimal, yet.

The strategy we employed is to discretize the environment into a world-fixed grid and keep track of the average point density in each cell over time. For this we first have to transform the laser scans to the world frame, which can be done using the estimated trajectory provided by the Extended Kalman Filter. For each grid cell and transformed laser scan the number of points falling into a specific cell is computed and stored using a moving average filter. The average point density of each cell can then be used to measure different events, including

- Point density 0: No objects detected in a cell

- Current point density lower than average: Some object has left that cell

- Current point density same as average: Object in cell did not move

The algorithm successfully detects motion, but also involves a few shortcomings:

- No classification as “static” or “dynamic” object. A (static) wall vanishing from the field of view results in a reduced density in the respective cell and will be flagged as motion.

- Density of cells with objects is based on the object's size, but also its distance to the robot. The closer the robot and the laser rangefinder to an object, the more points will be reflected by that object, resulting in a higher density.

<latex> {\fontsize{12pt}\selectfont \textbf{Future Work} </latex>

- More sensors: For this project only one laser rangefinder was mounted on the robot base. In the future we envision a system with four scanners, one in each corner of the robot.

- Parameter estimation: Once we have the possibility to record ground truth data, the next step would be to compute better estimates of parameters used in the robot model as well as adjust the system covariances used in the Extended Kalman filter for optimal results.

- Make motion detection algorithm more sophisticated. A model based on probability and likeliness of observations would likely increase the performance. Another option would also be to create and maintain a map of static objects, such that they won't be flagged as movement only because they leave the robot's field of view. Adding more sensors should also help with this problem as the robot would then have access to a full $360^\circ$ view of the surrounding environment, resulting in fewer objects leaving and reappearing in its field of view.