
<latex>{\fontsize{16pt}\selectfont \textbf{Optimization of Stereotypical Trotting Gait on HyQ}} </latex>

<latex>{\fontsize{12pt}\selectfont \textbf{Brahayam David Ponton Junes}} </latex>

<latex>{\fontsize{10pt}\selectfont \textit{Master Project RSC}} </latex>

<latex>{\fontsize{12pt}\selectfont \textbf{Abstract}} </latex>

Over the last decades, legged robot locomotion has become a very active field of research, because of the versatility that such robots would offer in many applications. With very few exceptions, however, legged robot experiments are performed in controlled lab environments. One reason for this limited use is that, in real-world environments, legged robots have to interact with unknown surroundings, and to do so successfully and safely they need to be compliant, as humans and animals are. In this project, a framework is proposed for learning an optimized stereotypical trotting gait for the Hydraulic Quadruped robot HyQ using variable impedance. This is an important step towards closing the gap between robot capabilities and nature’s approach to animal locomotion.

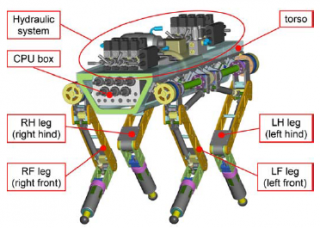

<latex>{\fontsize{12pt}\selectfont \textbf{Hydraulic Quadruped Robot - HyQ}} </latex>

<latex>{\fontsize{12pt}\selectfont \textbf{Reactive Controller Framework - IIT}} </latex>

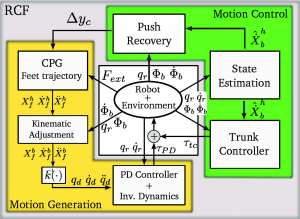

| RCF modules | Brief overview of the RCF modules (picture from IIT) |
|---|---|

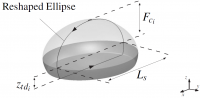

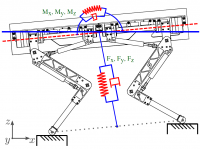

In this project, the parameter optimization is performed on top of the Reactive Controller Framework (RCF) 1), which has been designed for robust quadrupedal locomotion. The RCF is composed of two modules: the first generates elliptical trajectories for the feet, while the second controls the stability of the robot.
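
To make the trajectory-generation module more concrete, here is a minimal sketch that maps a gait phase to an elliptical foot trajectory and assigns trot phases to the four legs. It is an illustration under our own assumptions, not the RCF implementation. The following are all hypothetical: the phase convention (swing on [0, π), stance on [π, 2π)), the clipping of the lower half-ellipse to the ground line, the leg labels `LF`, `RF`, `LH`, `RH`, and the functions `elliptical_foot_position` and `trot_phases`.

```python
import math


def elliptical_foot_position(phase, step_length, step_height):
    """Foot position (x, z) relative to a nominal foothold; phase in radians.

    Hypothetical convention:
    phase in [0, pi): swing, the foot moves forward along the upper half-ellipse.
    phase in [pi, 2*pi): stance, the foot moves backward along the ground (z = 0).
    """
    phase = phase % (2.0 * math.pi)
    x = -0.5 * step_length * math.cos(phase)
    # Clip the lower half of the ellipse to the ground line to model stance.
    z = max(0.0, step_height * math.sin(phase))
    return x, z


def trot_phases(base_phase):
    """Diagonal leg pairs move together; the two pairs are half a cycle apart."""
    two_pi = 2.0 * math.pi
    a = base_phase % two_pi
    b = (base_phase + math.pi) % two_pi
    return {"LF": a, "RH": a, "RF": b, "LH": b}


if __name__ == "__main__":
    # Sample the left-front foot over one cycle with illustrative values (metres).
    for k in range(8):
        phi = 2.0 * math.pi * k / 8
        x, z = elliptical_foot_position(trot_phases(phi)["LF"], 0.10, 0.05)
        print(f"phase {phi:.2f} rad: x = {x:+.3f} m, z = {z:.3f} m")
```

In the project, the parameters shaping these trajectories (the WCPG parameters) were among those optimized. In this sketch, step length and height are just fixed illustrative numbers.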

<latex>{\fontsize{12pt}\selectfont \textbf{Learning and Control Strategy}} </latex>

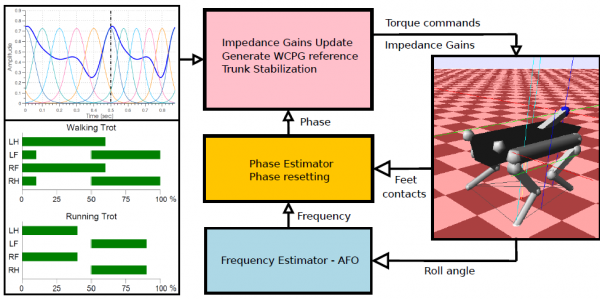

This section describes the design and control architecture implemented for gait optimization using the reinforcement learning algorithm PI2 (Policy Improvement with Path Integrals). The learning algorithm is built on top of SL (Simulation Laboratory), a physics simulation and control environment, and uses the Optimization engine, which implements reinforcement learning algorithms.
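
PI2 improves policy parameters from the costs of sampled roll-outs (single executions of the policy with perturbed parameters). The sketch below shows a simplified, episodic variant. It weights whole roll-outs by their total cost instead of using the full PI2 update with per-time-step cost-to-go weighting. It makes no claim about how SL or the Optimization engine implement the algorithm. The following are hypothetical: the function `pi2_episodic_update`, the exploration level `sigma`, the temperature-like parameter `h`, and `toy_cost`, a quadratic stand-in for the cost of a simulated trotting roll-out.

```python
import math
import random


def pi2_episodic_update(theta, rollout_cost, n_rollouts=10, sigma=0.1, h=10.0, rng=None):
    """One update: perturb theta, evaluate roll-outs, average the noise weighted by cost."""
    rng = rng or random.Random()
    # Each roll-out executes the policy with perturbed parameters theta + eps_k.
    noises = [[rng.gauss(0.0, sigma) for _ in theta] for _ in range(n_rollouts)]
    costs = [rollout_cost([t + e for t, e in zip(theta, eps)]) for eps in noises]
    # Exponentiate normalized costs so that low-cost roll-outs get high probability.
    c_min, c_max = min(costs), max(costs)
    span = (c_max - c_min) or 1.0
    weights = [math.exp(-h * (c - c_min) / span) for c in costs]
    total = sum(weights)
    probs = [w / total for w in weights]
    # The parameter update is the probability-weighted average of the exploration noise.
    delta = [sum(p * eps[i] for p, eps in zip(probs, noises)) for i in range(len(theta))]
    return [t + d for t, d in zip(theta, delta)]


def toy_cost(params):
    """Hypothetical stand-in for the cost of one simulated trotting roll-out."""
    target = [1.0, -0.5, 2.0]
    return sum((p - q) ** 2 for p, q in zip(params, target))


if __name__ == "__main__":
    rng = random.Random(0)
    theta = [0.0, 0.0, 0.0]
    for _ in range(200):
        theta = pi2_episodic_update(theta, toy_cost, rng=rng)
    print([round(t, 2) for t in theta], round(toy_cost(theta), 4))
```

The update needs no gradient of the cost, only roll-out costs. This is why PI2-style methods suit costs that come from running a simulator or a robot.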

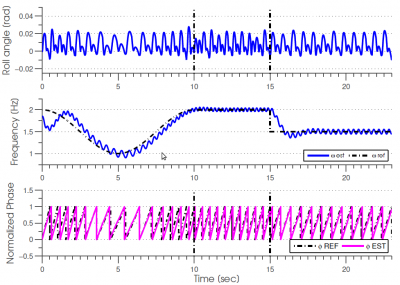

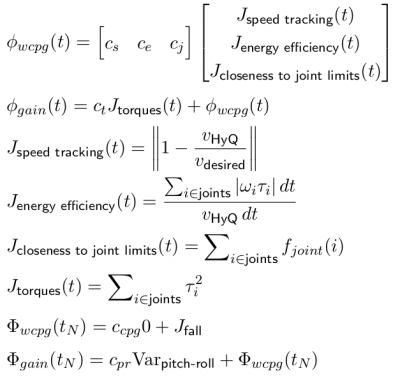

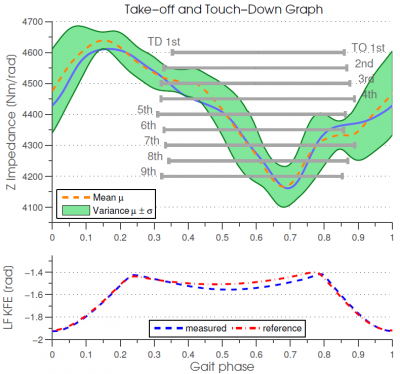

The learning and control setup rests on the following key ideas. First, the frequency and phase of the gait are learned online in order to synchronize the feedback control policies (Control and Adaptation Layer) 2) 3). The frequency is identified with frequency oscillators that synchronize to the roll angle of the robot, a periodic variable of the gait that carries information about its frequency. The identified frequency, together with a phase resetting mechanism 4), is then used to estimate the phase of the system. The phase resetting mechanism applies an event-based correction, using foot contacts as events. Second, the feedback control policies for variable impedance control and trunk stabilization are tested and improved by executing and evaluating roll-outs (Learning Layer). A roll-out is a single execution of the policy with a given set of parameters.
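
The text does not give the oscillator equations, so the sketch below assumes a standard adaptive-frequency Hopf oscillator as the frequency oscillator. A synthetic, normalized sinusoid drives it in place of the measured roll angle. A simple phase estimator then integrates the identified frequency and resets the phase at foot touchdown. The following are hypothetical choices for illustration, not the parameters used on HyQ: the classes `AdaptiveHopfOscillator` and `PhaseEstimator`, the helper `identify_frequency`, the gains `eps`, `gamma`, `mu`, the 1.5 Hz test frequency, and the touchdown phase value.

```python
import math


class AdaptiveHopfOscillator:
    """Hopf oscillator whose intrinsic frequency omega adapts to a periodic input."""

    def __init__(self, omega0, eps=2.0, gamma=8.0, mu=1.0):
        self.x, self.y = 1.0, 0.0      # start on the unit limit cycle
        self.omega = omega0            # current frequency estimate [rad/s]
        self.eps, self.gamma, self.mu = eps, gamma, mu

    def step(self, forcing, dt):
        """Explicit Euler step driven by the (normalized) periodic signal."""
        r2 = self.x ** 2 + self.y ** 2
        r = math.sqrt(r2)
        dx = self.gamma * (self.mu - r2) * self.x - self.omega * self.y + self.eps * forcing
        dy = self.gamma * (self.mu - r2) * self.y + self.omega * self.x
        # Frequency adaptation: omega drifts toward the frequency of the forcing.
        domega = -self.eps * forcing * self.y / r
        self.x += dx * dt
        self.y += dy * dt
        self.omega += domega * dt
        return self.omega


class PhaseEstimator:
    """Integrates the identified frequency and resets the phase on contact events."""

    def __init__(self, touchdown_phase=math.pi):
        self.phase = 0.0
        self.touchdown_phase = touchdown_phase  # phase assigned to a touchdown event

    def step(self, omega, dt, touchdown=False):
        if touchdown:
            # Event-based correction: a foot contact pins the phase to its nominal value.
            self.phase = self.touchdown_phase
        else:
            self.phase = (self.phase + omega * dt) % (2.0 * math.pi)
        return self.phase


def identify_frequency(true_omega, omega0, duration=60.0, dt=1e-3):
    """Drive the oscillator with a synthetic sinusoidal 'roll' signal."""
    osc = AdaptiveHopfOscillator(omega0)
    for k in range(int(duration / dt)):
        roll = math.sin(true_omega * k * dt)  # normalized stand-in for the roll angle
        osc.step(roll, dt)
    return osc.omega


if __name__ == "__main__":
    true_omega = 2.0 * math.pi * 1.5      # hypothetical gait frequency: 1.5 Hz
    est = identify_frequency(true_omega, omega0=2.0 * math.pi * 1.2)
    print(f"identified {est:.2f} rad/s, true {true_omega:.2f} rad/s")
```

On a robot, the roll signal would likely have to be filtered and scaled before being fed to the oscillator. The touchdown events would come from the foot contact detection.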

<latex>{\fontsize{12pt}\selectfont \textbf{Experiments and Results}} </latex>

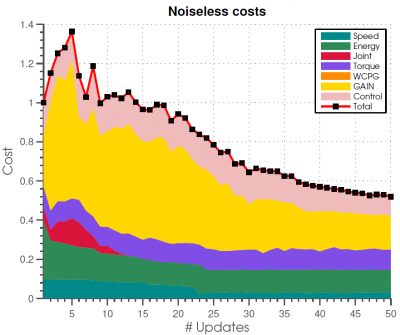

This section presents some of the experiments and results obtained in simulation.

<latex>{\fontsize{12pt}\selectfont \textbf{Conclusions}} </latex>

* The algorithm directly optimizes feedback terms by learning variable impedance schedules for the robot-environment interaction, as well as trunk stabilization parameters. It also indirectly learns feed-forward terms by optimizing the WCPG parameters that generate the desired elliptical foot trajectories. The results show that the algorithm scaled well to this high-dimensional problem, in which the parameters are optimized over the entire locomotion cycle (stance and flight phases).

* The learning algorithm has generated policies for different locomotion speeds, achieving a stable trotting gait with a limit cycle and an energy-efficient locomotion frequency.

* Specifying a target impedance is not trivial, which is why it is learned. The learning algorithm has learned a variable impedance schedule that gives the robot the compliance needed to interact with the environment. It provides enough stiffness during the swing phase and compliance during the stance phase. This trades off the two leg objectives of high-performance trajectory tracking and robust interaction with the environment.

* The algorithm has not yet been tested on the real robot. The next step to validate the results of this project will therefore be to perform learning on the real robot. This will allow HyQ to be pushed to its performance limits while also taking unmodelled dynamics into account.
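
The variable impedance conclusion can be illustrated with a phase-dependent stiffness schedule. The sketch assumes one possible parameterization that the text does not specify. Stiffness is a weighted sum of normalized periodic Gaussian-like basis functions of the gait phase, and the weights are the kind of parameters a learner such as PI2 could optimize. This stiffness feeds a joint-level impedance law whose damping is derived from a target damping ratio. The following are hypothetical: the function names, the eight basis functions, the weight values and units (Nm/rad), and the damping-ratio rule. With the assumed phase convention (swing on [0, π), stance on [π, 2π)), the chosen weights make the leg stiff in swing and compliant in stance.

```python
import math


def basis_activations(phase, n_basis, width=4.0):
    """Normalized periodic Gaussian-like bumps spread evenly over the gait cycle."""
    centers = [2.0 * math.pi * i / n_basis for i in range(n_basis)]
    acts = [math.exp(width * (math.cos(phase - c) - 1.0)) for c in centers]
    total = sum(acts)
    return [a / total for a in acts]


def stiffness_schedule(phase, weights, width=4.0):
    """Stiffness at a given phase as a convex combination of the weights."""
    acts = basis_activations(phase, len(weights), width)
    return sum(w * a for w, a in zip(weights, acts))


def impedance_torque(q_des, q, qd_des, qd, stiffness, damping_ratio=0.7, inertia=1.0):
    """Joint impedance law tau = K (q_des - q) + D (qd_des - qd)."""
    # Damping from a target damping ratio of a mass-spring-damper: D = 2 zeta sqrt(K I).
    damping = 2.0 * damping_ratio * math.sqrt(stiffness * inertia)
    return stiffness * (q_des - q) + damping * (qd_des - qd)


if __name__ == "__main__":
    # Hypothetical weights: stiff centres in swing [0, pi), compliant ones in stance.
    weights = [300.0] * 4 + [100.0] * 4
    for phase in (0.5 * math.pi, 1.5 * math.pi):
        k = stiffness_schedule(phase, weights)
        tau = impedance_torque(0.1, 0.0, 0.0, 0.0, k)
        print(f"phase {phase:.2f} rad: K = {k:.1f} Nm/rad, tau = {tau:.2f} Nm")
```

Normalizing the activations keeps the stiffness between the smallest and largest weight. This gives a learner a bounded, smooth schedule to explore.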