Author

I-Lin Fang (ifang@student.ethz.ch)

Supervisor: Timothy Sandy

Motivation

For robot manipulators, accurately estimating the motion of the end-effector is essential. To reach a target location precisely, visual-inertial (VI) sensors mounted on the end-effector are widely used. The visual sensor measures position more accurately than the inertial sensor, but image processing introduces a large latency. Until the next visual measurement arrives, the estimator must therefore rely on the less accurate inertial sensor data. As a result, most estimators generate their state estimates by fusing visual and inertial sensor data.

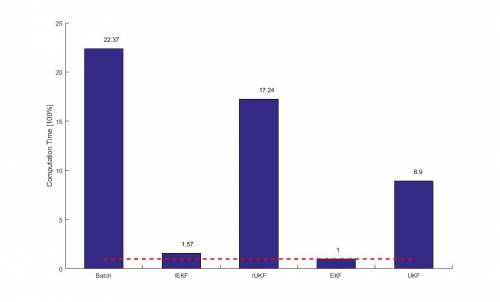

However, because computational speed and available memory are limited, we face a hard trade-off between the algorithm's computation time and the accuracy of the state estimate. The figure illustrates the performance of existing estimators: the EKF and iEKF compute quickly but give less accurate results, whereas the MHE is more accurate but needs much more time to compute. Our goal is therefore to find a method that provides an accurate estimate and also finishes its computation within the time interval available in our system. In this project, we implemented another variant of the Kalman filter (KF), the unscented Kalman filter (UKF), in the hope that it achieves this goal.

UKF State Estimation

Like the EKF, the UKF approximates the state distribution by a Gaussian random variable (GRV). However, instead of propagating only the mean point, as the EKF does, the UKF propagates a minimal set of sample points, called sigma points. These sample points capture the mean and covariance of the GRV more accurately than the EKF does, especially when applied to a non-linear system.

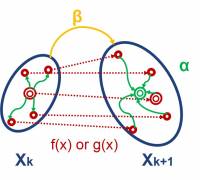

In summary, our estimator first samples several sigma points around the previous mean state. The number of sigma points depends on the dimension of the estimated state ($L$), and the sampling region is additionally determined by the previous covariance ($\bm{ \hat{P} }_{k-1}$), the measurement noise ($\bm{Q}_k$ or $\bm{R}_k$) and the parameter $\alpha$. Each time a new visual measurement arrives, the estimator first propagates each sigma point through the queue of inertial sensor data received during the update interval, and then updates the state estimate with the latest visual sensor data. At the end of this propagation, weights determined by the parameters $\alpha$ and $\beta$ recombine the propagated sigma points into the new state estimate.
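
The sampling and weighting step described above can be sketched as follows, assuming the commonly used scaled unscented transform on a plain Euclidean state vector. The function name `sigma_points_and_weights`, the secondary scaling parameter `kappa` and the default values are assumptions for illustration; the noise terms and the handling of orientation states in our estimator are not reproduced here.

```python
import numpy as np

def sigma_points_and_weights(mean, cov, alpha=1e-3, beta=2.0, kappa=0.0):
    """Return 2L+1 sigma points with their mean and covariance weights.

    Assumes the scaled unscented transform; kappa is a hypothetical extra
    parameter that is not discussed in this report.
    """
    L = mean.shape[0]
    lam = alpha**2 * (L + kappa) - L
    # Lower-triangular square root of the scaled covariance.
    S = np.linalg.cholesky((L + lam) * cov)

    points = np.empty((2 * L + 1, L))
    points[0] = mean
    for i in range(L):
        points[1 + i] = mean + S[:, i]      # positive offset along column i
        points[1 + L + i] = mean - S[:, i]  # mirrored negative offset

    # All non-central points share the same weight.
    w_mean = np.full(2 * L + 1, 1.0 / (2.0 * (L + lam)))
    w_cov = w_mean.copy()
    w_mean[0] = lam / (L + lam)
    # beta only enters the covariance weight of the central point.
    w_cov[0] = lam / (L + lam) + (1.0 - alpha**2 + beta)
    return points, w_mean, w_cov
```

Before any propagation, the weighted sum `w_mean @ points` recovers the input mean, and the weighted outer products of the deviations recover the input covariance. This is a quick sanity check for the weights.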

Experimental Results

This section presents the experimental results of the UKF.

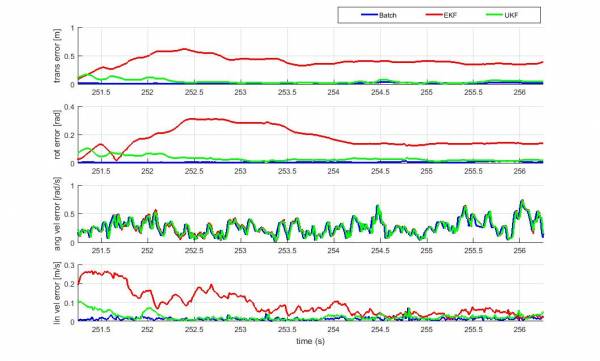

Estimated Trajectory

The figure shows the norms of the translation, orientation and velocity errors with respect to the ground truth. While the error of the batch estimate is nearly zero, both the EKF and the UKF show significant errors at the beginning of the data set, which tend to decrease over time. At the beginning, the translational and rotational errors accumulate quickly because the velocity error is relatively large. As our estimator collects more data, the estimated velocity becomes more accurate, and the position and orientation errors are clearly reduced.

UKF Parameters

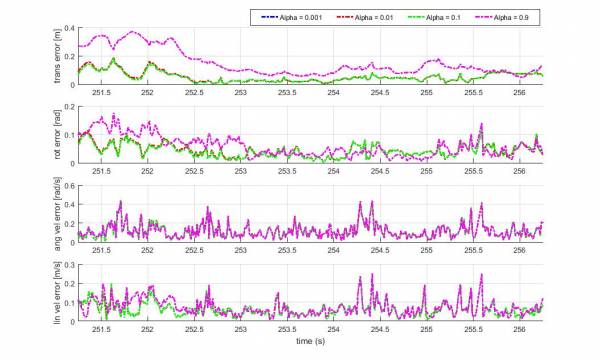

Alpha

Since $\alpha$ ranges from 0 to 1, we tested the values 0.001, 0.01, 0.1 and 0.9. The figure shows the resulting norm errors relative to the batch estimate. When $\alpha$ is small, i.e. far from the upper bound, the sigma points are sampled close to the previous mean state, and the estimation errors are relatively small and follow a similar pattern. As $\alpha$ approaches 1, however, the estimation errors grow dramatically.
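
One way to see why a small $\alpha$ keeps the sigma points close to the mean is the commonly used scaled unscented transform. The formulation below is an assumption about the parameterization: the secondary scaling parameter $\kappa$ does not appear in this report and is often set to 0, and the state is written as $\bm{\hat{x}}_{k-1}$. It is a sketch, not necessarily the exact set of equations used in this project.

$$
\lambda = \alpha^2 (L + \kappa) - L, \qquad
\bm{\mathcal{X}}_0 = \bm{\hat{x}}_{k-1}, \qquad
\bm{\mathcal{X}}_i = \bm{\hat{x}}_{k-1} \pm \left( \sqrt{(L + \lambda)\, \bm{\hat{P}}_{k-1}} \right)_i, \quad i = 1, \dots, L
$$

Because $L + \lambda = \alpha^2 (L + \kappa)$, each offset equals $\alpha \sqrt{L + \kappa}$ times the $i$-th column of the square root of $\bm{\hat{P}}_{k-1}$. The spread of the sigma points therefore scales linearly with $\alpha$: under this parameterization, $\alpha = 0.9$ places the points 900 times farther from the mean than $\alpha = 0.001$, so they sample the non-linear model much farther from the current estimate.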

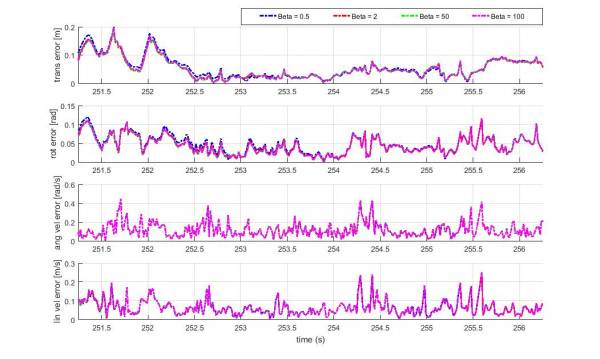

Beta

For a Gaussian distribution, the optimal $\beta$ is 2. Based on this prior knowledge, we additionally selected 0.5, 50 and 100 as values of $\beta$ for testing. In the figure, the estimate with $\beta = 0.5$ shows a slightly larger norm error, while the other results overlap almost completely. To find the most suitable value of $\beta$, we further calculated the total norm errors over the whole data set and normalized them by the results at $\beta = 2$.
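
The normalization step can be sketched as follows, assuming that the "total norm error" is the sum of the per-time-step norm errors. The function name `normalized_total_errors` and the input layout (a dictionary from $\beta$ to an error trace) are assumptions for illustration, and the traces in the example are placeholders, not measured results.

```python
import numpy as np

def normalized_total_errors(errors_by_beta, reference_beta=2.0):
    """Sum each error trace over time and divide by the total at the reference beta."""
    totals = {beta: float(np.sum(trace)) for beta, trace in errors_by_beta.items()}
    reference_total = totals[reference_beta]
    return {beta: total / reference_total for beta, total in totals.items()}

# Placeholder traces for illustration only; these are NOT measured results.
example = {
    0.5: np.array([0.4, 0.2, 0.2]),
    2.0: np.array([0.2, 0.1, 0.1]),
}
ratios = normalized_total_errors(example)
```

A ratio above 1 means that the corresponding $\beta$ performed worse than $\beta = 2$ over the whole data set, and a ratio below 1 means that it performed better.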

Iterated EKF and Iterated UKF

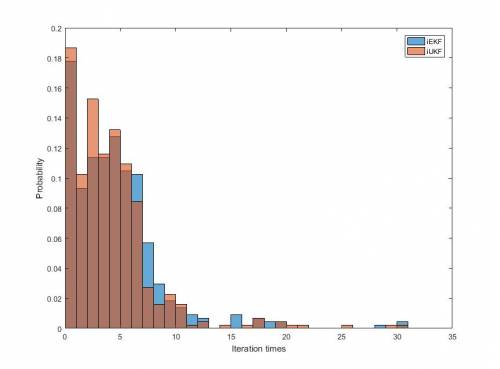

From the previous results we know that the UKF's estimates are generally more accurate than the EKF's. We would therefore expect the iUKF to need fewer iterations to converge than the iEKF. The figure shows that the iUKF is indeed more likely to converge within a few iterations, whereas the iEKF has a higher probability of taking more iterations.

However, because the UKF's measurement update step takes much longer to compute than the EKF's, the total computation time of the iUKF is significantly longer than that of the iEKF, as shown in the figure.
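
To make the notion of "iterations to converge" concrete, the following is a minimal sketch of an iterated EKF measurement update with a simple termination criterion. The function name `iekf_update`, the threshold `tol`, the cap `max_iter` and the criterion itself (norm of the change in the state) are assumptions for illustration, not the criterion used in this project.

```python
import numpy as np

def iekf_update(x_prior, P, z, h, H_jac, R, tol=1e-8, max_iter=20):
    """Iterated EKF measurement update; returns state, covariance and iteration count.

    h(x) is the measurement model and H_jac(x) its Jacobian. The stopping
    rule (change in the state below tol) is a hypothetical choice.
    """
    x = x_prior.copy()
    for it in range(1, max_iter + 1):
        H = H_jac(x)  # relinearize around the current iterate
        K = P @ H.T @ np.linalg.inv(H @ P @ H.T + R)
        x_new = x_prior + K @ (z - h(x) - H @ (x_prior - x))
        converged = np.linalg.norm(x_new - x) < tol
        x = x_new
        if converged:
            break
    # Covariance from the last linearization point.
    P_post = (np.eye(len(x)) - K @ H) @ P
    return x, P_post, it
```

With a linear measurement model, the loop reproduces the standard Kalman update in the first pass and stops in the second, once the state no longer changes. For a non-linear model the iteration count depends on `tol`, which is one reason why the choice of termination criterion affects the comparison.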

Conclusion

In this report, we presented and evaluated our estimator, which uses the UKF algorithm to estimate the robot pose from VI sensor measurements. First, we showed that the UKF provides a more accurate estimate than the EKF, at the cost of 9 times the computation time, which is nevertheless still short enough for our system requirements.

Furthermore, we roughly tuned the UKF's parameters, $\alpha$ and $\beta$, and assessed their influence on the results. Although their effects have not been investigated in depth, we showed that the UKF's performance can be further improved by setting its parameters properly.

Finally, we introduced iterated versions of the EKF and UKF, namely the iEKF and iUKF. While the UKF's estimate is better than the EKF's, the iUKF is not competitive with the iEKF: its accuracy is similar, but its computation time is far longer. The termination criterion may affect this judgment and makes the comparison difficult, but the iUKF's significantly slower computation clearly puts it at a disadvantage.