
<latex>{\fontsize{16pt}\selectfont \textbf{Visual Perception System for Hydraulically Actuated Quadruped Robot}} </latex>

<latex>{\fontsize{12pt}\selectfont \textbf{[Silvio Hug]}} </latex>

<latex>{\fontsize{10pt}\selectfont \textit{[Master Thesis, ME]}} </latex>

<latex> {\fontsize{12pt}\selectfont \textbf{Abstract}} </latex>

The goal of this project is to develop a localization and mapping system for a hydraulically actuated quadruped robot. A stereo camera is used to perceive the environment. The stereo images are matched to create a disparity map. The current images are compared with previous images to estimate the state of the camera, and these estimates are refined with inertial measurements. The disparity map is fused with the estimated state to generate a three-dimensional map of the environment. This thesis focuses on the generation of the disparity map and the estimation of the state.

Several algorithms are available for computing the disparity. Four of them are analysed and tested in this thesis, and speed and quality improvements have been implemented to increase their performance.

For state estimation, two visual estimators and one visual-inertial estimator are analysed. Their performance is tested to identify the one best suited to our application.

The disparity algorithm and the state estimator with the best performance are implemented on the robot.

<latex> {\fontsize{12pt}\selectfont \textbf{Procedure for Creating a Dense Map from Stereo Camera Images}} </latex>

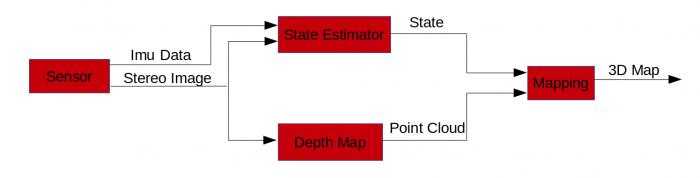

For our application we use a stereo camera with an integrated inertial measurement unit (IMU). The procedure to create a dense map from stereo images is presented in the figure below.

The sensor records stereo camera images and inertial data. The state of the robot is estimated by matching features in the current stereo images to the corresponding features in previous images, and the inertial data from the IMU is used to refine this estimate. In addition, the stereo images are used to calculate the depth of each pixel by triangulation. Using the camera calibration parameters, the pixels are then projected to three-dimensional points in space. This point cloud is fused with the estimated state to generate a dense map of the environment.
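The final fusion step amounts to expressing every point of the current cloud in a common world frame before adding it to the map. The following is a minimal sketch, assuming the state estimator delivers the camera pose as a rotation matrix `R` and a translation `t` (camera to world) and that the map is a plain list of points. The helper names `rot_z`, `transform_points` and `fuse_into_map` are hypothetical and not taken from the thesis code.

```python
import math

def rot_z(angle):
    """Hypothetical helper: rotation matrix about the z-axis, used only for the example."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def transform_points(R, t, points):
    """Map points from the camera frame to the world frame: p_w = R * p_c + t."""
    return [
        tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        for p in points
    ]

def fuse_into_map(world_map, R, t, points):
    """Append one frame's point cloud, expressed in world coordinates, to a list-based map."""
    world_map.extend(transform_points(R, t, points))
    return world_map

# Example: camera rotated by 90 degrees about z and shifted 1 m along x.
world_map = fuse_into_map([], rot_z(math.pi / 2), (1.0, 0.0, 0.0), [(1.0, 0.0, 0.0)])
# expected: a single point at about (1, 1, 0)
```

A practical dense map would typically store the fused points in a voxel grid or octree instead of an ever-growing list, so that repeated observations of the same surface are merged.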

<latex> {\fontsize{12pt}\selectfont \textbf{Hardware Systems}} </latex>

Different hardware systems are used for testing and for perception. A computer with an Nvidia graphics card is used to test the algorithms. To implement the vision system on the robot, an Nvidia Jetson TK1 Development Kit is installed. This platform was chosen because it contains a quad-core ARM processor and a GPU with 192 CUDA cores for parallel processing. Furthermore, it is lightweight and has low power consumption. For perception, the robot uses a VI-Sensor from Skybotix, which includes a stereo camera and an inertial measurement unit.

<latex> {\fontsize{12pt}\selectfont \textbf{Depth Map}} </latex>

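From two stereo images, the depth of each pixel can be calculated by triangulation. For a simplified case, two cameras with the same orientation, equal focal length $f$ and parallel optical axes, separated by a baseline $b$, are assumed. If $u_l$ and $u_r$ denote the horizontal image coordinates of the same scene point in the left and right image, the following equation for the depth $z$ holds.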

<latex>

\begin{equation}

z = b\cdot\frac{f}{u_l - u_r}.

\end{equation}

</latex>

The depth is inversely proportional to the disparity $u_l - u_r$. To obtain a disparity map of the whole image, each pixel of the left camera image has to be matched to the corresponding pixel in the right camera image.
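The depth equation and the projection of a pixel into three-dimensional space can be written out in a few lines. This sketch assumes a rectified pinhole camera with the focal length $f$ given in pixels (the same in both image directions), the baseline $b$ in metres, and a principal point `(cx, cy)` from the calibration; the function names are illustrative. With $b = 0.1$ m and $f = 400$ px, a disparity of 20 px gives $z = 2$ m, while 10 px already gives 4 m, which shows how quickly the depth resolution degrades for distant points.

```python
def depth_from_disparity(d, f, b):
    """Depth z = b * f / d for parallel stereo; None for an invalid (non-positive) disparity."""
    if d <= 0:
        return None
    return b * f / d

def back_project(u, v, d, f, b, cx, cy):
    """Back-project pixel (u, v) of the left image with disparity d into the left camera frame."""
    z = depth_from_disparity(d, f, b)
    if z is None:
        return None
    # Pinhole model: x = (u - cx) * z / f, y = (v - cy) * z / f.
    return ((u - cx) * z / f, (v - cy) * z / f, z)

# Example values (assumed, not the VI-Sensor calibration): b = 0.1 m, f = 400 px.
point = back_project(420, 240, 20, 400, 0.1, 320, 240)  # about (0.5, 0.0, 2.0)
```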

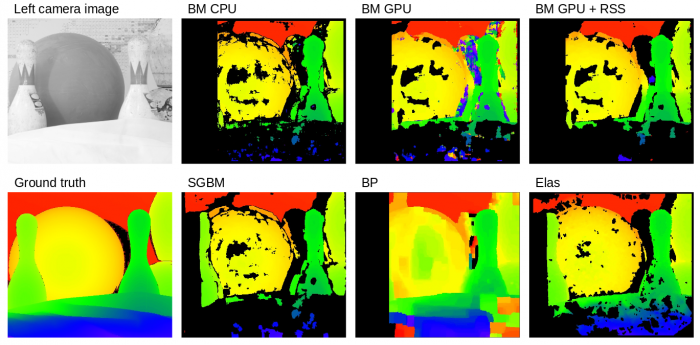

Several algorithms exist for computing the disparity of camera images. In this thesis, four of them are analysed and tested: Block Matcher (BM)1), Semi Global Block Matcher (SGBM)2), Belief Propagation (BP)3) and Efficient Large-Scale Stereo Matching (Elas)4).
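To illustrate the principle behind the simplest of these, block matching, the sketch below compares a small window around each left-image pixel with windows shifted along the same row of the right image and keeps the shift with the lowest sum of absolute differences (SAD), a winner-take-all strategy. It assumes rectified grayscale images stored as lists of rows and is not the BM implementation used in the thesis; production implementations add pre- and post-filtering and are heavily optimized.

```python
def sad(left, right, r, cl, cr, half):
    """Sum of absolute differences between two (2*half+1) x (2*half+1) windows."""
    total = 0
    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            total += abs(left[r + dr][cl + dc] - right[r + dr][cr + dc])
    return total

def block_match(left, right, max_disp, half=1):
    """Winner-take-all block matching along horizontal scanlines.

    Returns a disparity map with -1 where no disparity was computed (image border).
    """
    rows, cols = len(left), len(left[0])
    disp = [[-1] * cols for _ in range(rows)]
    for r in range(half, rows - half):
        for c in range(half, cols - half):
            best_d, best_cost = -1, None
            # The match in the right image lies at column c - d (u_r = u_l - d).
            for d in range(max_disp + 1):
                if c - d - half < 0:
                    break  # window would leave the right image
                cost = sad(left, right, r, c, c - d, half)
                if best_cost is None or cost < best_cost:
                    best_cost, best_d = cost, d
            disp[r][c] = best_d
    return disp
```

The window size (`half`) is the main trade-off: larger windows are more robust in weakly textured regions but blur disparity edges.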

The parameters of the algorithms are tuned to obtain high-quality disparity maps, and additional filters are applied to improve the quality further.
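Which filters are used is not detailed here. As an example of a common post-filter, a left-right consistency check computes a second disparity map with the right image as reference and discards left disparities that the right map does not confirm, which removes many mismatches in occluded regions. The sketch assumes that a right-image pixel at column `cr` with disparity `d_R` corresponds to left column `cr + d_R`, and that invalid pixels are marked with -1; it is an illustration, not the thesis's filter.

```python
def left_right_check(disp_left, disp_right, max_diff=1, invalid=-1):
    """Invalidate left disparities that are not confirmed by the right disparity map.

    Left pixel (r, c) with disparity d corresponds to right pixel (r, c - d),
    whose own disparity should again be approximately d.
    """
    out = [row[:] for row in disp_left]  # do not modify the input map
    for r, row in enumerate(disp_left):
        for c, d in enumerate(row):
            if d < 0:
                continue  # already invalid
            cr = c - d
            if cr < 0 or disp_right[r][cr] < 0 or abs(disp_right[r][cr] - d) > max_diff:
                out[r][c] = invalid
    return out

filtered = left_right_check([[0, 1, 2, 2]], [[0, 1, 2, 7]])  # [[0, 1, -1, 2]]
```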

Several optimization strategies are applied to increase the speed of the algorithms: the disparity is computed on downsampled images, vectorized (SIMD) instructions are used, and the algorithms are parallelized on the CPU and the GPU.
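Downsampling pays off strongly because width, height and disparity search range all shrink by the downsampling factor $s$, so a naive block matcher evaluates roughly $s^3$ fewer matching costs. The price is that a disparity found on the small image must be multiplied by $s$, so the disparity step becomes $s$ pixels at full resolution. The sketch below assumes block averaging for downsampling and nearest-neighbour upsampling of the result; the thesis does not state which resampling was used.

```python
def downsample(img, s):
    """Downsample a grayscale image by an integer factor s using block averaging."""
    rows, cols = len(img) // s, len(img[0]) // s
    return [
        [sum(img[r * s + i][c * s + j] for i in range(s) for j in range(s)) / (s * s)
         for c in range(cols)]
        for r in range(rows)
    ]

def upscale_disparity(disp_small, s, invalid=-1):
    """Nearest-neighbour upsampling of a disparity map computed at 1/s resolution.

    Valid disparities are multiplied by s, because one low-resolution pixel
    spans s full-resolution pixels.
    """
    out = []
    for row in disp_small:
        full_row = []
        for d in row:
            full_row.extend([invalid if d < 0 else d * s] * s)
        for _ in range(s):
            out.append(full_row[:])
    return out
```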

The disparity maps produced by the algorithms on a test image5) are presented in the images below.

<latex> {\fontsize{10pt}\selectfont \textbf{Performance of Disparity Algorithms}} </latex>

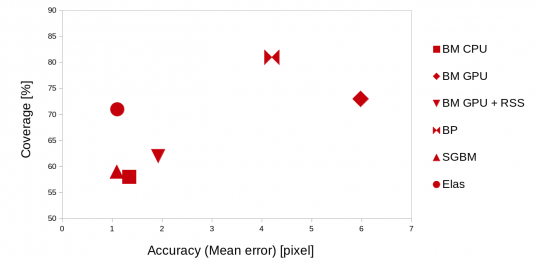

To determine the performance of the different algorithms, the quality and speed of each of them are measured.

Quality is rated by two factors, accuracy and coverage. Accuracy is determined from the difference between the computed disparity maps and the ground-truth images. Coverage is defined as the number of detected (valid) disparity values.
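The exact accuracy measure is not specified here, so the sketch below computes two common variants alongside coverage: the mean absolute disparity error and the ratio of "bad" pixels whose error exceeds a threshold (1 px by default, an assumed value). Errors are only evaluated where both the estimate and the ground truth are valid, and coverage is reported as the fraction of pixels with a detected disparity; invalid pixels are assumed to be marked with -1.

```python
def evaluate(disp, gt, invalid=-1, bad_thresh=1.0):
    """Return (mean_abs_error, bad_pixel_ratio, coverage) for one disparity map."""
    total = detected = compared = bad = 0
    abs_err_sum = 0.0
    for row_d, row_g in zip(disp, gt):
        for d, g in zip(row_d, row_g):
            total += 1
            if d == invalid:
                continue  # no disparity detected: lowers coverage only
            detected += 1
            if g == invalid:
                continue  # no ground truth available for this pixel
            err = abs(d - g)
            compared += 1
            abs_err_sum += err
            if err > bad_thresh:
                bad += 1
    mae = abs_err_sum / compared if compared else float("nan")
    bad_ratio = bad / compared if compared else float("nan")
    coverage = detected / total if total else float("nan")
    return mae, bad_ratio, coverage
```

Reporting accuracy and coverage separately matters because a filter that discards uncertain pixels usually improves accuracy while lowering coverage.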

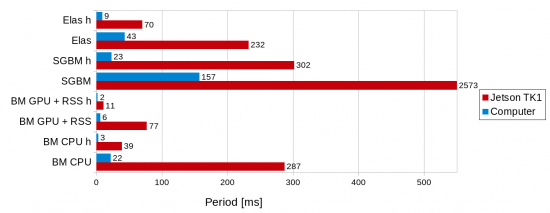

The speed of the algorithms is measured by running each of them on the same test images, both on the computer and on the Jetson TK1 board.
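A speed measurement of this kind can be sketched as a small timing harness that runs a disparity function over a fixed set of image pairs and reports the mean time per pair and the resulting frame rate. The function name and the `warmup` parameter are illustrative assumptions; the untimed warm-up pass is a common precaution so that one-off start-up costs, such as GPU initialization, do not distort the result.

```python
import time

def measure_speed(algorithm, image_pairs, repeats=5, warmup=1):
    """Time algorithm(left, right) over all image pairs.

    Returns (mean seconds per pair, frames per second). The first `warmup`
    passes over the data are run but not timed.
    """
    if not image_pairs or repeats < 1:
        raise ValueError("need at least one image pair and one timed repeat")
    for _ in range(warmup):
        for left, right in image_pairs:
            algorithm(left, right)
    start = time.perf_counter()
    for _ in range(repeats):
        for left, right in image_pairs:
            algorithm(left, right)
    elapsed = time.perf_counter() - start
    per_pair = elapsed / (repeats * len(image_pairs))
    fps = 1.0 / per_pair if per_pair > 0 else float("inf")
    return per_pair, fps
```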

The results of the tests are presented in the figures below. The graphics also include two adapted versions of the algorithms: BM running on the GPU, and BM with an additional post-filter (RSS).

Quality

Speed

<latex> {\fontsize{10pt}\selectfont \textbf{Implementation}} </latex>

Finally, a ROS node is implemented on the Jetson TK1 that receives the images directly from the stereo camera and produces disparity maps in real time. Three algorithms are installed: BM CPU, BM GPU + RSS and Elas. They were chosen because of their performance in the tests.

<latex> {\fontsize{12pt}\selectfont \textbf{State Estimator}} </latex>

The motion of the camera can be calculated from a sequence of camera images. To this end, feature points are detected in the current image and matched to those in previous images. From the change in their image positions, the relative change in position and orientation of the camera can be estimated.
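The core estimation step can be illustrated in a reduced, planar setting: given matched feature points from the previous and the current frame, the rotation angle and translation that best align them in the least-squares sense have a closed-form solution based on the centroids of both point sets. Practical estimators solve for full three-dimensional motion and must also reject wrong matches; this sketch covers neither and is not the method of Fovis, Viso2 or Aslam. If the points are static in the world and expressed in camera coordinates, the camera's own motion is the inverse of the returned transform.

```python
import math

def estimate_planar_motion(prev_pts, curr_pts):
    """Least-squares rigid 2D transform (theta, tx, ty) with curr ~ R(theta) * prev + t."""
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")
    px = sum(p[0] for p in prev_pts) / n
    py = sum(p[1] for p in prev_pts) / n
    qx = sum(q[0] for q in curr_pts) / n
    qy = sum(q[1] for q in curr_pts) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(prev_pts, curr_pts):
        # Work with coordinates relative to the centroids.
        ax, ay, bx, by = ax - px, ay - py, bx - qx, by - qy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated previous centroid onto the current centroid.
    tx = qx - (c * px - s * py)
    ty = qy - (s * px + c * py)
    return theta, tx, ty
```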

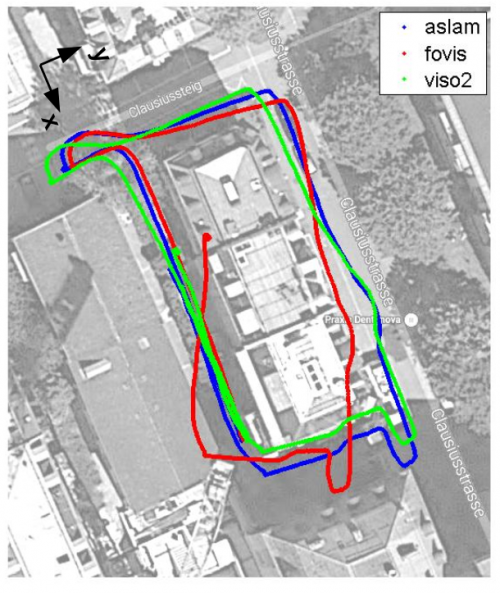

Two visual state estimators, Fovis6) and Viso27), and one visual-inertial state estimator, Aslam8), have been analysed and tested in this thesis. The estimators are applied to test videos recorded with the Skybotix sensor. The results are presented in the figure below.

<latex> {\fontsize{10pt}\selectfont \textbf{Implementation}} </latex>

Currently, the two visual state estimators are implemented on the Jetson TK1 board; some adaptations were necessary to run them on the ARM processor. The Aslam estimator runs on the computer, and further adaptations are required to implement it on the Jetson TK1 board.

<latex> {\fontsize{12pt}\selectfont \textbf{Conclusion}} </latex>

Four disparity algorithms have been analysed, and their quality and speed have been determined. The algorithms showing the best results are implemented on the Jetson TK1 board to process the camera images from the Skybotix sensor and generate disparity maps in real time.

Two visual state estimators and one visual-inertial state estimator have been tested. The two visual estimators are implemented on the Jetson TK1 board; the implementation of the visual-inertial state estimator on the board remains a future step.

In addition, the mapping process, which fuses the output of the state estimator with the disparity maps, still has to be implemented.